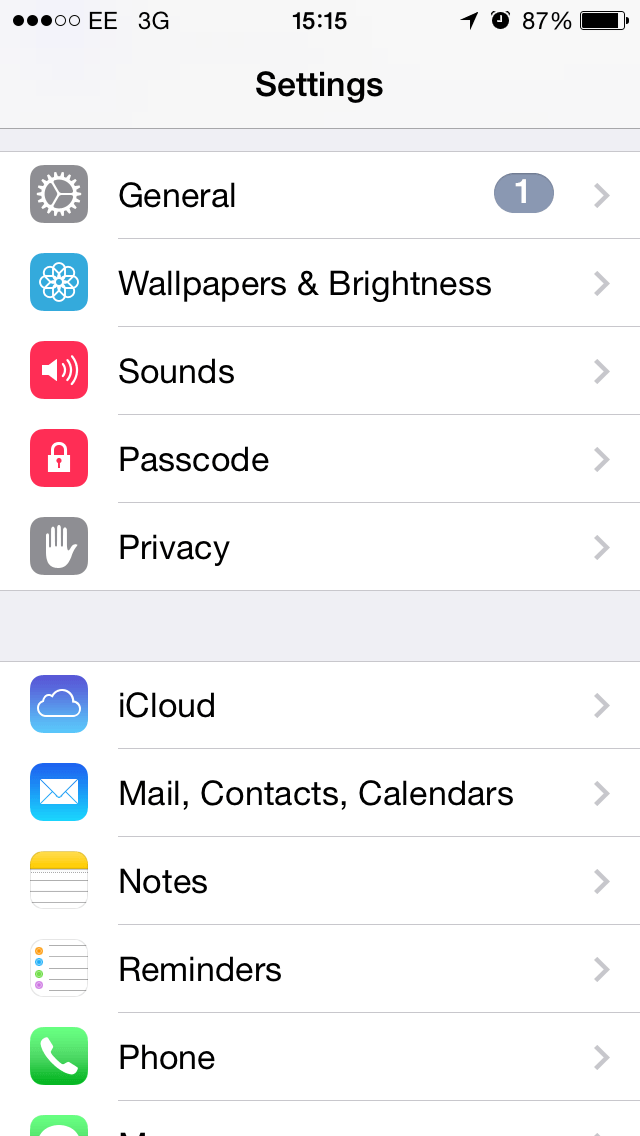

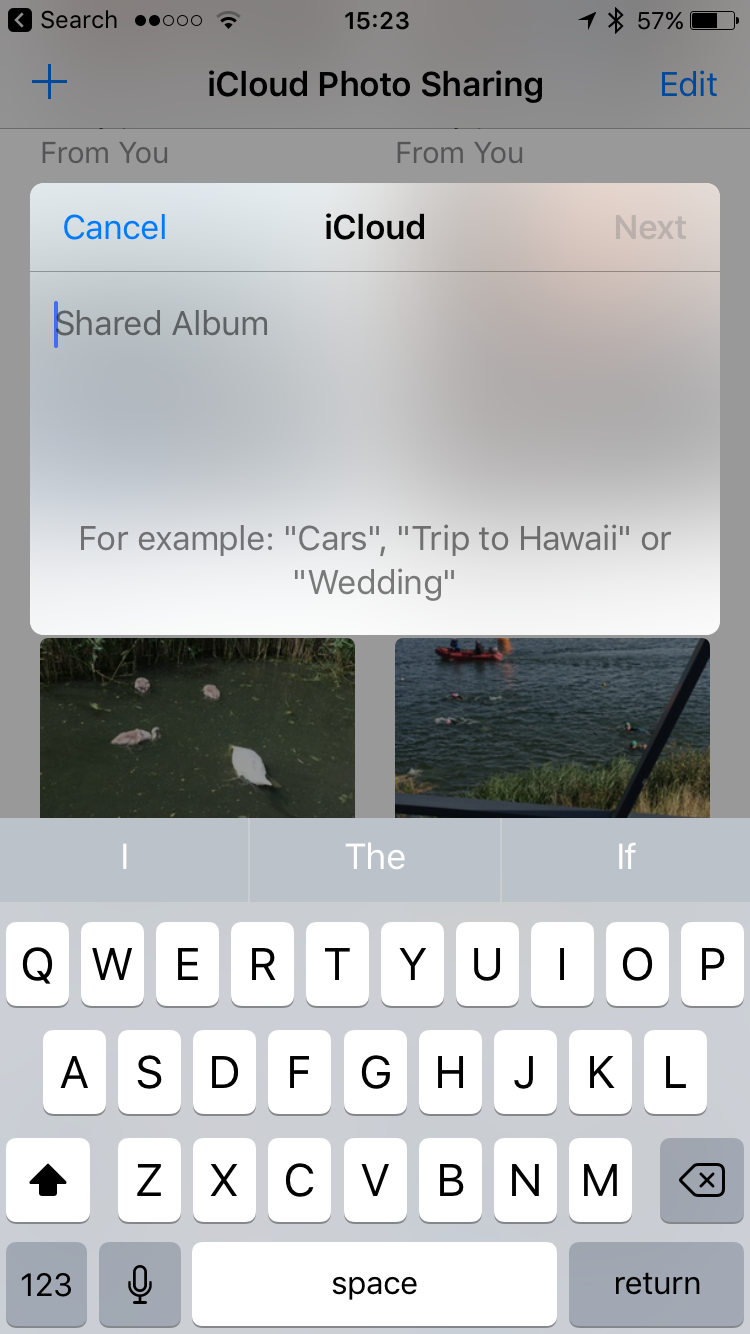

Apple, the company that proudly touted its user privacy bona fides in its recent iOS 15 preview, recently introduced a feature that seems to run counter to its privacy-first ethos: the ability to scan iPhone photos and alert the authorities if any of them contain child sexual abuse material (CSAM). While fighting against child sexual abuse is objectively a good thing, privacy experts aren’t thrilled about how Apple is choosing to do it.

The new scanning feature has also confused a lot of Apple’s customers and, reportedly, upset many of its employees. Some say it builds a back door into Apple devices, something the company swore it would never do. So Apple has been doing a bit of a damage control tour over the past week, admitting that its initial messaging wasn’t great while defending and trying to better explain its technology, which it insists is not a back door but in fact better for users’ privacy than the methods other companies use to look for CSAM.

Apple’s new “expanded protections for children” might not be as bad as it seems if the company keeps its promises. But it’s also yet another reminder that we don’t own our data or devices, even the ones we physically possess. You can buy an iPhone for a considerable sum, take a photo with it, and put it in your pocket. And then Apple can figuratively reach into that pocket and into that iPhone to make sure your photo is legal.

Apple’s child protection measures, explained

In early August, Apple announced that the new technology to scan photos for CSAM will be installed on users’ devices with the upcoming iOS 15 and macOS Monterey updates. Scanning images for CSAM isn’t a new thing: Facebook and Google have been scanning images uploaded to their platforms for years, and Apple is already able to access photos uploaded to iCloud accounts. Scanning photos uploaded to iCloud in order to spot CSAM would make sense and be consistent with Apple’s competitors.

But Apple is doing something a bit different, something that feels more invasive, even though the company says it’s meant to be less so.
